Key Takeaways

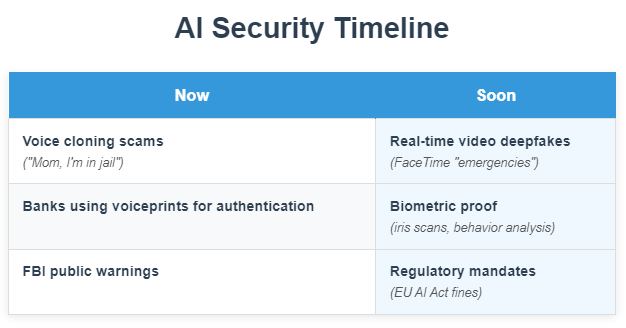

- Sam Altman warns of an imminent AI-driven fraud crisis, citing voice authentication as critically vulnerable.

- Altman calls financial institutions that still authenticate with voiceprints "crazy"; AI can now clone voices flawlessly, and video is next.

- The FBI confirms AI voice scams are already targeting parents and officials, including impersonations of Marco Rubio.

- Altman advocates for "proof of human" tools like The Orb by Tools for Humanity and urges overhauled authentication systems.

- Regulatory gaps persist: 85% of countries lack AI policies, while the EU’s AI Act imposes fines up to 7% of global turnover for violations.

Voiceprints Are Dead

Sam Altman stood at the Federal Reserve on Tuesday. He told bankers and regulators something obvious. Voice authentication is suicide. Financial institutions still use voiceprints to move money. Say a phrase. Prove you’re you. Altman called it crazy. AI kills that method. It kills it dead. He knows. His company built some of the tech. Bad actors clone voices now. They clone them perfectly. They steal money. They wreck lives. Parents get calls. Voices sound like their children. Help me. I’m in trouble. Money vanishes. Secretary of State Marco Rubio’s voice faked. Foreign ministers contacted. A governor fooled.

Altman doesn’t blink. “AI has fully defeated most authentication methods,” he said. Except passwords. And even those feel shaky. The Federal Reserve’s top cop, Michelle Bowman, listened. She mumbled about partnerships. Altman didn’t wait for handshakes.

Deepfakes Are Knocking

Video fakes come next. FaceTime calls. Moving images. Your boss asks for a wire transfer. Your lawyer demands urgent payment. All fake. All real enough to trick eyes and ears. Altman sees it barreling toward us. “Indistinguishable from reality,” he said. No one is ready. Banks drag feet. Regulations stall. OpenAI won’t build impersonation tools. But someone will. “This is not super difficult,” Altman shrugged. It’s coming fast. Very damn soon.

The FBI warned about this last year. Everyone nodded. Nothing changed. Now Altman shouts it in Washington’s marble halls. His company opens an office here early next year. Thirty people. Policy meetings. Trainings. Research. They’ll whisper in ears. They’ll say: Fix this.

The Orb and Other Lifelines

Altman backs Tools for Humanity. They built The Orb. A small device. It scans your iris. Proof you’re human. Proof you’re you. Banks haven’t touched it. They cling to voiceprints like 1995 never ended. Altman pushes harder. Authentication needs revolution. Not patches. Not bandaids. Rip the old systems out.

He knows the stakes. Fraud isn’t his only nightmare. Superintelligence haunts him. Rogue AI building bioweapons. Hacking power grids. Enemies racing ahead while America debates. China doesn’t debate. China builds. The White House drafts an “AI Action Plan.” Trump will see it soon. Altman’s team gave recommendations. They want growth. They want dominance. They don’t want handcuffs.

Banks: Scared but Hooked

Wall Street hears Altman. They sweat. Trillion-dollar errors keep them awake. Regulatory hurdles slow AI adoption. But Altman sees cracks in their resistance. Banks whisper back: We have no choice. If they don’t use AI, they die. Simple as that. One banker told him: Survival demands this tech. New controls? Sure. But stop? Impossible.

OpenAI’s economist, Ronnie Chatterji, claims ChatGPT has 500 million users. Half of its American users are under 34. They tutor themselves with it. They upskill. Banks notice. Young workers walk in with AI habits. They expect tools. They demand efficiency. Banks cave quietly.

Ethics or Obituary

Dr. Mona Ashok at Henley Business School writes plainly: AI without ethics is disaster. Not theory. Fact. A tech company’s hiring tool filtered out women for years. No one noticed. The algorithm learned from history. History favored men. Automated bias. Automated discrimination. It’s everywhere. UK auditors found AI tools filtering job candidates by race, sexuality, gender. Illegal. Running anyway.

She says leaders must ask: Where does data come from? Who gets excluded? Ignore this, and trust evaporates. Brands crumble. The EU’s new AI Act fines companies up to 7% of global turnover for violations. Biased algorithms? That’s a violation. The FTC hunts deceptive models in the U.S. No more hiding.

AI Fraud: Today vs. Tomorrow

UN Sounds the Alarm

Geneva hosted the AI for Good summit this week. Doreen Bogdan-Martin from the ITU spoke cold truth. The biggest risk isn’t machines killing humans. It’s the rush to embed AI everywhere. No understanding. No guardrails. She looked at the crowd. Governments. Tech giants. Kids with robotics projects. “We are the AI generation,” she said. That means learning fast. Schools. Workplaces. Life.

Eighty-five percent of countries have no AI policy. None. Zero. Regulation trails innovation like a tired dog. The summit showed flying cars. Brain-computer interfaces. An orchard robot. Glittering toys. And beneath it all, fear. Human-level AI predicted within three years. Energy drains. Safety holes. Biases baked deep.

Job Loss? Altman Shrugs

Anthropic’s CEO frets about AI taking jobs. Amazon’s boss agrees. Altman doesn’t. “No one knows,” he says. Predictions are noise. Entire job classes vanish? Sure. New ones emerge. Always do. He imagines a future where work is optional. A “silly status game.” Humans fill time. Feel useful. No real jobs needed. Utopia? Maybe. He doesn’t sweat the details.

Reality check: UK workers spend 3.5 hours weekly on AI tools. Research. Data crunching. Content churn. Sixty-three percent use it. Fifty-six percent feel optimistic. Sixty-one percent feel overwhelmed. They want training. Half their companies offer none. Chaos rules.

The Hallucination Problem

AI lies. Sweetly. Confidently. OpenAI’s newest models hallucinate 33-48% of the time. Google’s too. Apple found “fundamental limitations.” Complex problems break AI logic. Accuracy collapses. Jailbreaks run wild. Israeli researchers tricked chatbots into giving bomb instructions. Dark LLMs spread. Unchecked. Unchallenged.

This isn’t sci-fi. It’s now. Internal communicators scramble. They make rules:

- Fact-check every AI output

- Train staff on limitations

- Audit tools monthly

Human oversight isn’t a luxury. It’s survival. Reputations burn on AI’s pyre of lies.

FAQs

What terrifies Sam Altman most about AI?

Voice authentication in finance. Lax banks. Impersonation tools that let thieves drain accounts with a cloned voice.

How soon does Altman predict an AI fraud crisis?

“Very, very soon.” Video deepfakes will escalate today’s voice scams into widespread chaos.

What solution does Altman support?

Tools like The Orb by Tools for Humanity, which uses iris scans for “proof of human” authentication.

Are regulators acting?

Slowly. The EU’s AI Act bans harmful practices and fines violators up to 7% of global revenue. Meanwhile, 85% of nations lack any AI policy.

Do workers fear AI taking jobs?

UK data shows mixed feelings: 56% feel optimistic and 61% feel overwhelmed. Workers want training, and half their companies offer none.
